4 Natural Deduction

We now develop a deductive system for propositional logic. The system will enable us to derive the conclusion of a valid argument from its premises in the language of propositional logic. These derivations will closely resemble chains of reasoning we may use to convince ourselves that a proposition follows from others in natural language. One difference, however, is that our derivations will display a very rigid structure where each step we take will be codified by a formal rule of inference. These rules of inference will in turn be closely tied to the semantic behavior of the connectives they formalize.

It will turn out that an argument formulated in the language of propositional logic is valid if, and only if, there is a derivation of the conclusion from its premises. That means that the deductive system will provide yet another approach to the study of validity in propositional logic.

There is a variety of different proof systems for propositional logic designed for different purposes. Some of them are specifically designed to facilitate the design of proofs in accordance with rules that are not dissimilar from steps we take in moore familiar contexts, whereas others minimize the rules of inference available for a derivation in order to focus on the study of the metatheory of propositional logic. Here we will focus on a system of the first type otherwise known as a natural deduction system.

One way to approach an argument is to break it down into a series of elementary inferences that take us from the premises of the argument to its conclusion. These intermediate steps are licensed by rules of inference designed to either exploit or introduce complex formulas of a certain form. Such a chain of elementary inferences will be a derivation.

- Derivation

-

A derivation is a finite sequence of formulas, some of which may be marked as premises and assumptions. Other formulas in the sequence will be licensed by the application of a rule of inference to one or more prior formulas in the series. The last formula in the sequence will be its conclusion.

This preliminary characterization of a derivation will remain incomplete until we specify the rules of inference for the system, which we will do next. Once the rules of inference are in place, we will be able to check a purported derivation to make sure that each step accords to one of the rules and that the chain of inferences qualifies as a derivation.

Derivations will take the form of a numbered series of formulas that begin with the assumptions of the argument. We do this in order to be able to refer back to prior formulas in the proof, and we will draw a horizontal line after the premises. While formulas above the line are assumptions, the formulas that follow will be explicitly licensed by the rules of inference of the system or will on occasion be another assumption, which will be labelled as such.

- Derivability

-

A formula

We will write

Natural Deduction Rules

Most natural deduction rules constrain either the introduction or the elimination of a connective in some complex formula of propositional logic. Each connective is governed by an introduction rule, which specifies the conditions under which a complex formula with that connective may be introduced into the derivation, and an elimination rule, which explains how to exploit a complex formula with that connective.

There is one important exception. We have a single structural rule, which enables us to repeat a formula from an earlier available line.

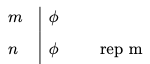

Repetition

- Repetition

-

You may repeat a formula

The annotation indicates that we are allowed to repeat the formula on line

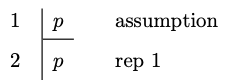

This is perhaps the simplest derivation you will ever encounter.

Example 4.1

Conjunction and Conditional

We will now specify introduction and elimination rules for conjunction and the conditional, and we will postpone the discussion of similar rules for disjunction and negation until later.

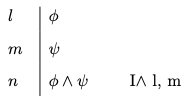

Conjunction Introduction

- Conjunction Introduction

-

You may write a conjunction

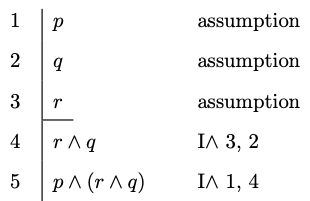

To illustrate the rule, notice how the introduction rule for conjunction suffices for a derivation of

Example 4.2

Conjunction Elimination

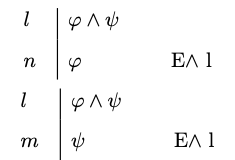

- Conjunction Elimination

-

You may write a conjunct

-

You may write a conjunct

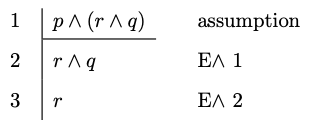

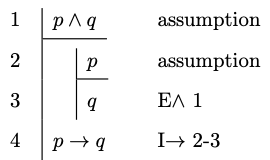

By way of illustration, note how the introduction rule for conjunction suffices for a derivation of

Example 4.3

Conditional Elimination

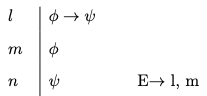

- Conditional Elimination

-

You may write

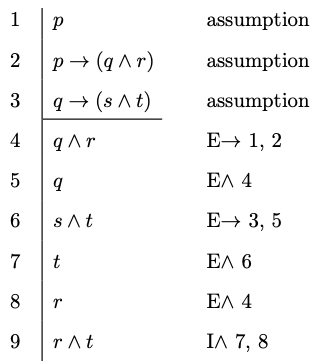

We may now produce derivations for more sophisticated arguments.

Example 4.4

Conditional Introduction

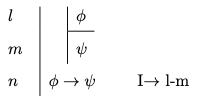

- Conditional Introduction

-

You may write

The thought behind the rule is simple. We are entitled to write tje conditional

Let us look at assorted applications of this rule:

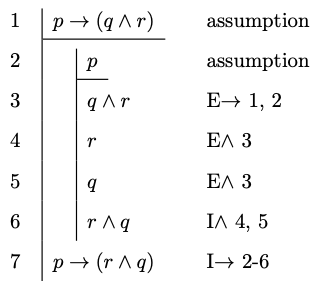

Example 4.5

Example 4.6

Disjunction and Negation

The introduction and elimination rules for disjunction and negation require more care. We start with the rules for disjunction and postpone the discussion of negation until the end.

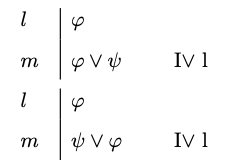

Disjunction Introduction

- Disjunction Introduction

-

You may write a disjunction

Disjunction Elimination

The thought is that a disjunction must be the case if one of its disjuncts is the case. If Pluto is a planet, then Pluto is a planet or the Sun is a planet. For remember that it all takes for a disjunction to be the case is that one of its disjuncts is the case.

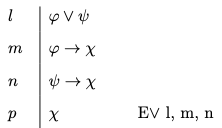

- Disjunction Elimination

-

You may write

By way of motivation, notice that if

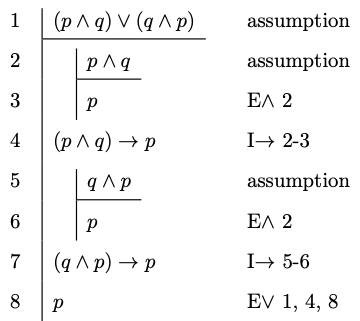

Here is an example of the elimination rule in action.

Example 4.7

We finally introduce natural deduction rules for negation, which is the last connective left to consider. To reason with negation, it will be convenient to introduce a new symbol into the deductive system:

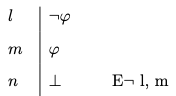

Negation Elimination

- Negation Elimination

-

You may write

Negation Introduction

One way to understand the rule is as a permission to write the symbol for absurdity in the presence of a formula and its negation.

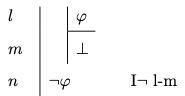

- Negation Introduction

-

You may write a negation

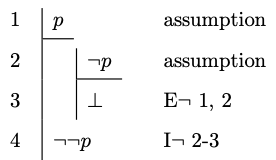

Example 4.8

This rule requires some explanation. What motivates it is the thought that we should conclude a negation of the form

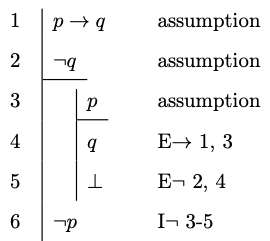

Example 4.9

Ex Falso Sequitur Quodlibet

- Ex Falso Sequitur Quodlibet

-

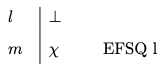

You may write any formula once

The Latin phrase Ex Falso Sequitur Quodlibet means that anything at all follows from a contradiction, and we will use the initials EFSQ to abbreviate the rule that enables to write an arbitrary formula after a contradiction.

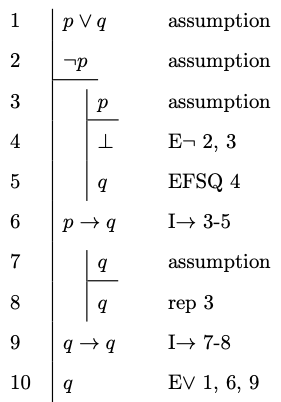

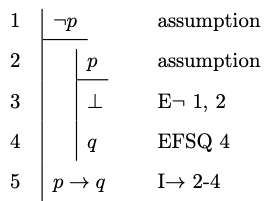

Example 4.10

Example 4.11

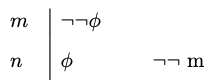

We require one more rule in order to be able to cancel a doble negation. That is, we want a rule designed to help us move from

Double Negation

- Double Negation

-

You may write a formula

Example 4.12

That completes the list of natural deduction rules for propositional logic. They suffice for the purpose of deriving a conclusion from the premises of a valid argument of propositional logic.

How to Construct Proofs

There is no algorithm we can use in order to find a proof of a conclusion from a set of given premises, but sustained practice will help you develop a sense of how to proceed in each case. There are, however, some strategies to keep in mind when you first encounter a problem.

Your plan for a proof will require you to work backwards from what you want.

If the conclusion of the argument is a conjunction

If the conclusion of the argument is a conditional

If the conclusion is a negation of the form

Once you have devised a plan for a proof, you will work forward from your premises and/or assumptions in order to fill the gaps in the arguments. The elimination rules for each connective allow you to exploit them in order to complete some of the intermediate steps from the premises and assumptions to desired conclusions.

Given a conjunction

Given a disjunction

To exploit a conditional

The case of negation

Exercises

Provide a natural deduction proof in order to justify each of the claims below: